The Importance of Thinking Across Layer Boundaries

This week we’ve been working on our Congestion Control book, which has required me to read lots of papers and RFCs to get my head around all the different flavors of congestion control that have emerged in the last decade or so. It’s truly challenging to decide what to include and what to omit in a book on congestion control, as there has been an almost endless set of improvements to TCP congestion control since the publication of Jacobson and Karels’ algorithms in 1988. As part of this work I have dug into QUIC, which recently made it through the lengthy process of IETF standardization, and that provided the impetus for this week’s post.

As I recalled in a recent post on Service Mesh, there was a moment early in my career when I realized that strict layering was not always the best way to think about protocols. And that realization hit me again with force when I started to look into QUIC a few years ago. One way to think about QUIC is that, after 30 years of running HTTP over TCP, we’re finally getting a chance to revisit the layering decisions that we’ve lived with since the creation of the Web. And the development of QUIC tells us a lot about layering, as well as the challenges of introducing new protocols to the Internet in its current form.

The design of HTTP/1.0 is a pretty good case study in how layering can go wrong. HTTP, being an application layer protocol, makes the reasonable assumption that the transport layer below it reliably delivers the bytes fed into it. Unfortunately, HTTP/1.0 opened a fresh TCP connection for every object on a web page, which generated a huge amount of extra work and latency to open and close all those connections, including a flurry of three-way handshakes, while ensuring that many connections would be too short to ever get out of the slow-start phase of congestion control. HTTP/1.1 addressed many of these shortcomings, but over time more problems have arisen with the layering of HTTP over TCP. In particular, the addition of another layer (TLS) to secure HTTP compounded the issues to the point where it made sense to consider an alternative transport to TCP. This alternative transport has now been developed as QUIC.
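To put rough numbers on the slow-start point, here is a back-of-the-envelope sketch. It assumes an idealized sender: no loss, an unlimited receiver window, and a congestion window that doubles every round trip. The function names, the 1460-byte segment size, and the 20 KB object are illustrative choices of mine, not measurements.

```python
import math

def slow_start_rounds(object_bytes, mss=1460, initial_window=1):
    """Round trips needed to deliver an object while the window doubles each RTT.

    Idealized model: no loss, no delayed ACKs, unlimited receiver window.
    """
    segments_left = math.ceil(object_bytes / mss)
    window = initial_window          # congestion window, in segments
    rounds = 0
    while segments_left > 0:
        segments_left -= window      # send one window's worth of segments
        window *= 2                  # slow start: the window doubles per RTT
        rounds += 1
    return rounds

def fresh_connection_rtts(object_bytes, **kwargs):
    """Add one RTT for the three-way handshake on a brand-new connection."""
    return 1 + slow_start_rounds(object_bytes, **kwargs)

print(slow_start_rounds(20_000))                     # 14 segments, window starts at 1
print(fresh_connection_rtts(20_000))                 # same transfer plus the handshake
print(slow_start_rounds(20_000, initial_window=10))  # a larger initial window
```

Under these assumptions the 20 KB object finishes while the window is still doubling, so the connection is torn down before it learns anything useful about the path, and the next object starts over from the initial window.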

As I’ve read through a few hundred pages of QUIC RFCs, one thing that keeps jumping out is the difficulty of getting layering “right”. Take the layering of transport security, for example. Before QUIC, the layering was roughly as follows:

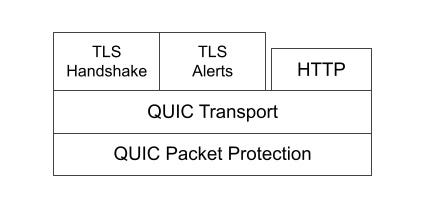

This looks nice and clean, like something straight out of a networking textbook. In RFC 9001, which defines how TLS works with QUIC, however, the picture is less tidy, as follows:

As the RFC says: “Rather than a strict layering, these two protocols cooperate: QUIC uses the TLS handshake; TLS uses the reliability, ordered delivery, and record layer provided by QUIC.”

Why the departure from strict layering? Because the old way of doing things inserted multiple RTTs of delay at every layer. First a three-way handshake to set up TCP, then a TLS handshake to set up the required security (authentication, encryption), then finally the HTTP layer gets to start asking for something like a web page. In fact, these problems of layering are called out in the original QUIC design document. A similar line of reasoning led to the decision not to use SCTP over DTLS, even though at first glance that combination would have met many of the goals of QUIC. The strictly layered approach would again have introduced multiple RTTs of setup delay before HTTP got a chance to send its first application-layer request.
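The cost of stacking handshakes is easy to tally. The sketch below adds up the setup round trips for a strictly layered stack and for QUIC. The per-layer counts are the commonly cited costs of a full (non-resumed) handshake rather than figures from this post, and the 50 ms RTT and the names `SETUP_RTTS` and `setup_delay_ms` are assumptions for illustration.

```python
# Round trips each layer needs before the layer above it may start sending.
# Commonly cited full-handshake costs; session resumption is ignored here.
SETUP_RTTS = {
    "TCP": 1,      # three-way handshake
    "TLS 1.2": 2,  # full handshake
    "TLS 1.3": 1,  # full handshake
    "QUIC": 1,     # transport and TLS 1.3 handshakes carried out together
}

def setup_delay_ms(stack, rtt_ms):
    """Delay before the first HTTP request can leave the client."""
    return sum(SETUP_RTTS[layer] for layer in stack) * rtt_ms

for stack in (["TCP", "TLS 1.2"], ["TCP", "TLS 1.3"], ["QUIC"]):
    print(" + ".join(stack), "->", setup_delay_ms(stack, rtt_ms=50), "ms")
```

With a 50 ms round trip, the layered stacks spend 150 ms and 100 ms on setup, while the combined QUIC handshake spends 50 ms. Resumed connections can do better still by sending 0-RTT data, which this sketch leaves out.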

My high-level takeaway from this discussion is that while layering makes it easier to think about protocols, it’s not always the best guide to design or implementation. When the designers of HTTP/1.0 thought about TCP, they apparently had a model of “this layer makes sure our commands get to the other side”, which is correct but massively oversimplifies the situation once you start to think about security and performance. The designers of QUIC, with the benefit of decades of experience of running HTTP over TCP, came to the conclusion that the abstractions offered by TCP and TLS were not quite right for HTTP, and used the requirements of the application layer to revisit the design for the transport and security layers.

The other really interesting thing about QUIC is the realization that middleboxes are a fact of life in the modern Internet. This realization was already dawning at the time HTTP/1.1 was designed (the mid-1990s), as that protocol recognizes the existence of caches and proxies sitting between the true ends of an HTTP connection. But the prevalence of middleboxes such as NATs and firewalls has ballooned since then, and led to the conclusion that the only option for a new transport protocol to succeed in today’s Internet was for it to sit over UDP. (SCTP stands as the example of a new transport that failed to take off for this reason.) In other words, QUIC, a transport protocol, needs to run over UDP, a transport protocol, because the Internet no longer really allows any transport protocol other than UDP or TCP. The only way to get a new transport protocol deployed is to run it over one that already exists (and QUIC over TCP is off the table for reasons that are hopefully obvious).

This isn’t really what was expected when the Internet was designed, and it is the sort of thing that has often been lamented by members of the Internet community who remember a simpler time before middleboxes. But QUIC deals with the world as it is rather than wishing for a different one, and it’s hard to fault that approach.

There is yet one more interesting aspect of QUIC, which is its approach to congestion control. Having just written a thousand words on that topic for our upcoming book, I won’t try to cover it in depth here. But strengths of the congestion control approach in QUIC include (a) collecting all the decades of experience of TCP congestion control into a new transport protocol design, and (b) recognizing that congestion control continues to evolve and thus allowing new algorithms to be added to QUIC over time. If you read RFC 9002 and the work it references, you would actually end up with a pretty good understanding of the advances that have been made in congestion control since 1988. It would, however, be more efficient to read our book (still a draft; contributions welcome).
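One way to picture point (b) is as a narrow interface between the sender and whatever algorithm is deciding the window. The sketch below is my own illustration, not an API from any QUIC implementation: `CongestionController`, `RenoLike`, and `Sender` are hypothetical names, and the 1200-byte datagram size is an assumed value. The window arithmetic is loosely modeled on the NewReno-style controller described in RFC 9002 and leaves out recovery periods, persistent congestion, and pacing.

```python
from abc import ABC, abstractmethod

MAX_DATAGRAM_SIZE = 1200  # bytes; an assumed value for this sketch


class CongestionController(ABC):
    """Hypothetical interface: the sender only ever calls these methods."""

    cwnd: int  # congestion window, in bytes

    @abstractmethod
    def on_ack(self, acked_bytes: int) -> None: ...

    @abstractmethod
    def on_loss(self) -> None: ...


class RenoLike(CongestionController):
    """Simplified NewReno-style window arithmetic."""

    def __init__(self) -> None:
        self.cwnd = 10 * MAX_DATAGRAM_SIZE   # initial window
        self.ssthresh = float("inf")         # stay in slow start until first loss

    def on_ack(self, acked_bytes: int) -> None:
        if self.cwnd < self.ssthresh:
            self.cwnd += acked_bytes         # slow start
        else:                                # congestion avoidance
            self.cwnd += MAX_DATAGRAM_SIZE * acked_bytes // self.cwnd

    def on_loss(self) -> None:
        # Halve the window, but never drop below two datagrams.
        self.cwnd = max(self.cwnd // 2, 2 * MAX_DATAGRAM_SIZE)
        self.ssthresh = self.cwnd


class Sender:
    """Knows nothing about the algorithm beyond the interface above."""

    def __init__(self, controller: CongestionController) -> None:
        self.controller = controller
        self.bytes_in_flight = 0

    def can_send(self, size: int) -> bool:
        return self.bytes_in_flight + size <= self.controller.cwnd
```

Swapping in a different algorithm then means passing a different `CongestionController` to `Sender`; nothing else in the sender has to change.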

Now that I’ve invested the effort in understanding the design and rationale of QUIC, I find that I’m quite impressed with the results. I say that as someone who worked on many IETF standards over the years: it’s not an easy environment in which to reach consensus and make progress on a protocol design. The design team seized the chance to incorporate a large amount of collective experience in congestion control, and transport protocols more broadly, into a single design. And the end result shows an awareness of cross-layer interactions that is all too rare.

Our article on Network Verification made it to The Register. Decentralized finance, which we touched on in earlier posts, may still be speculative, but it’s at least important enough to get a write-up in The Economist. And we survived the 2021 Mansfield Earthquake.